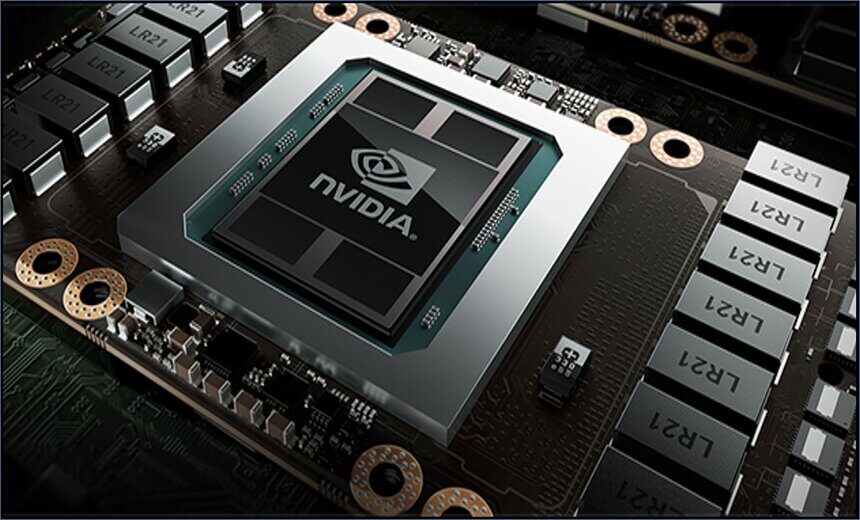

NVIDIA plays a pivotal role in powering modern AI workloads through its GPU architectures, which have evolved far beyond their original purpose of graphics rendering. At the heart of this transformation is the Blackwell architecture, a GPU design built for AI that features fifth-generation Tensor Cores, support for NVFP4 (a 4-bit floating-point format), and fifth-generation NVLink, which in NVL72 rack-scale systems joins 72 GPUs into a single high-bandwidth domain. These features enable fast GPU-to-GPU communication and efficient scaling across multi-GPU systems, which makes the platform well suited to training and deploying large-scale AI models. NVIDIA’s GPUs are now foundational to workloads ranging from generative AI and deep learning to scientific simulation and autonomous systems, where massive parallelism and performance per watt matter most.

Beyond hardware, NVIDIA amplifies its impact through a broad software ecosystem, much of it open source. CUDA-X libraries, TensorRT, and RAPIDS accelerate the AI lifecycle, from data preparation through model training to inference, while NeMo (for building and customizing generative AI models) and Dynamo (for serving inference at scale) lower the barrier to advanced AI capabilities. This pairing of hardware and software lets developers move from prototype to production whether they’re working in cloud environments, data centers, or on edge devices. By supporting open-source models and fostering innovation across industries, NVIDIA has positioned itself not just as a hardware provider, but as a cornerstone of global AI infrastructure.